Cybersecurity Threats for LLM-Based Chatbots: 2026 Update for Professionals

Cybersecurity Threats for LLM-Based Chatbots: 2026 Update for Professionals

Large Language Models (LLMs) are hugely transforming the way organizations interact with data, customers, and internal workflows. Powered by sophisticated AI, LLM-based chatbots are capable of understanding natural language, generating human-like responses, and performing complex tasks ranging from customer support to data analysis.

Popular implementations, such as OpenAI’s ChatGPT, Google Bard, and Microsoft Bing Chat, have demonstrated the versatility and efficiency these systems bring to business operations.

The adoption of LLM-powered chatbots is accelerating across industries, including finance, healthcare, marketing, and enterprise IT. Organizations rely on these systems to provide 24/7 customer assistance, reduce operational costs, and deliver personalized experiences at scale.

In Canada, for example, businesses are increasingly deploying LLM chatbots to automate routine tasks while ensuring regulatory compliance in handling sensitive data. Similarly, startups and tech firms worldwide are launching LLM chatbot projects to enhance user engagement and streamline service delivery.

However, the growing reliance on these AI systems introduces critical cybersecurity risks. As LLMs handle sensitive information and interact autonomously with users, they become targets for sophisticated attacks, including prompt injection, data exfiltration, and social engineering exploits. Cybercriminals can exploit vulnerabilities to access confidential data, manipulate outputs, or execute unauthorized actions, making robust cybersecurity measures essential.

Understanding the landscape of Cybersecurity Threats for LLM-based Chatbots is crucial for businesses aiming to deploy these systems safely. This article examines the top 10 cyber threats facing LLM chatbots, explores real-world case studies, and highlights strategies to mitigate risk.

Additionally, it draws on insights from top cyber threat intelligence companies to provide actionable guidance for organizations seeking to safeguard AI deployments while maintaining operational efficiency.

Start a Life-Changing Career in Cybersecurity Today

What Are LLM-Based Chatbots?

LLM-based chatbots are advanced artificial intelligence systems designed to understand, interpret, and generate human-like language. Unlike traditional chatbots that rely on predefined scripts or simple pattern matching, these AI-powered systems leverage large language models (LLMs) trained on vast datasets of text. This allows them to generate contextually accurate responses, perform complex reasoning, and adapt to varied conversational scenarios.

At their core, LLM-based chatbots process natural language inputs and generate outputs that simulate human conversation. They can answer questions, summarize documents, provide recommendations, and even assist in coding tasks. Leading examples include OpenAI’s ChatGPT, Google Bard, and Microsoft Bing Chat, which serve as benchmarks for both commercial and research-oriented applications.

These systems rely on LLM-based chatbot architecture that combines a neural network trained on diverse textual data with inference algorithms capable of predicting relevant responses, for developers and organizations, platforms like LLM chatbot GitHub repositories provide open-source frameworks and examples to build custom AI assistants or integrate them into existing workflows.

Use Cases Across Industries

LLM-based chatbots are increasingly deployed across sectors where communication and data processing are critical:

- Customer Service: Handling high-volume inquiries and providing consistent support at scale.

- Healthcare: Assisting in patient communication, symptom checking, and administrative tasks.

- Finance: Facilitating account queries, fraud detection alerts, and transaction guidance.

- Enterprise Productivity: Automating internal workflows, report generation, and scheduling.

Their versatility extends beyond routine tasks. For instance, LLM-powered systems can serve as internal assistants for employees, generating real-time insights from large datasets or summarizing long documents for decision-making. Organizations are also experimenting with LLM chatbot projects for research, marketing, and virtual event management.

Benefits and Adoption Trends

The advantages of deploying LLM AI chatbots are significant:

- Efficiency and Cost Reduction: Chatbots can operate 24/7, reducing human workload and associated operational costs.

- Personalization: They analyze user interactions to provide tailored responses, improving engagement.

- Scalability: LLM chatbots can manage thousands of concurrent interactions, ideal for enterprise-level support.

In Canada and other regions, the adoption of LLM-based chatbots is accelerating, with businesses prioritizing systems that balance automation with security compliance. Additionally, free versions of LLM chatbots and open-source models are making AI more accessible for experimentation and small-scale deployments. Lists of top LLM chatbots and comparative studies guide organizations in selecting tools that meet specific operational needs.

However, with growing use comes increasing exposure to cyber threats, making a proactive approach to LLM cybersecurity essential for safeguarding both users and business assets. Understanding the top risks is the next critical step.

RELATED ARTICLE: What Are Governance Risk and Compliance GRC Certifications?

Top 10 Cybersecurity Threats for LLM-Based Chatbots

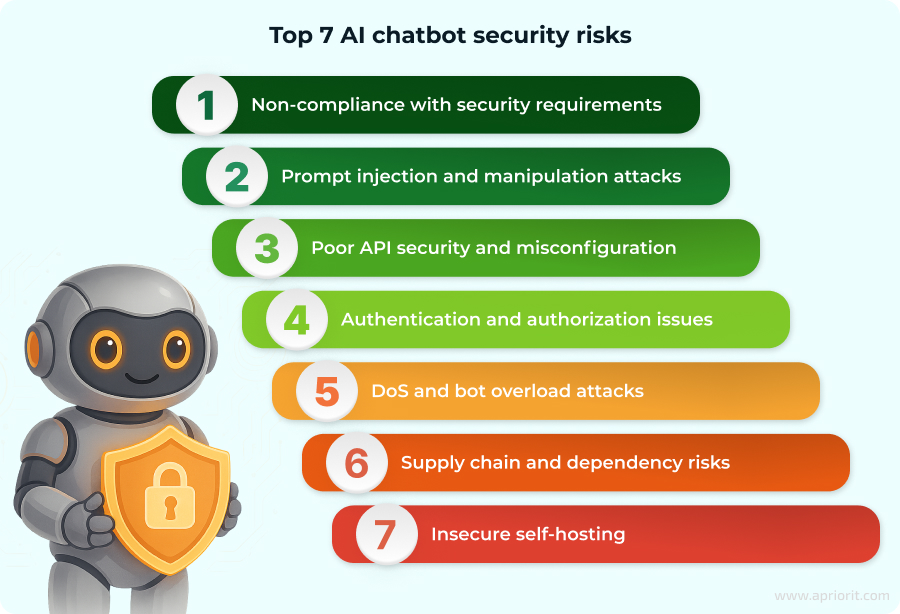

While LLM-based chatbots offer remarkable functionality, they also introduce a new set of cybersecurity risks. Organizations deploying these systems must be aware of the top cyber threats to protect sensitive data, ensure regulatory compliance, and maintain trust.

1. Misinformation and Disinformation

LLM chatbots rely on vast datasets, which may include outdated, biased, or inaccurate information. This can lead to the generation of false or misleading responses. For example, a chatbot providing financial guidance could inadvertently give incorrect investment advice, potentially causing financial harm. Organizations must implement robust content filtering and validation to mitigate this risk.

2. Social Engineering Attacks

The human-like interaction capabilities of LLM chatbots can be exploited in social engineering attacks. Malicious actors may trick users into revealing sensitive information or clicking unsafe links, leveraging trust established through AI interactions. Training employees and integrating monitoring tools can help detect and prevent such attacks.

3. Privacy and Data Leakage

LLM-based chatbots collect, store, and process significant amounts of user data, from conversation logs to personal information. Without secure handling, this data may be exposed through breaches or unintended outputs. Compliance with privacy regulations like GDPR and HIPAA, as well as data encryption, is critical.

4. Unauthorized Transactions and Excessive Agency

Chatbots with excessive autonomy may inadvertently perform tasks they are not authorized to execute. For instance, an e-commerce chatbot could process payments without proper verification. Limiting operational scope and implementing multi-factor authentication are essential safeguards.

5. Escalation of Conflict

Overly responsive chatbots can misinterpret user intent, escalating frustration instead of resolving it. A repetitive or inappropriate response loop can damage customer trust. Proper scenario testing and feedback loops are crucial to prevent such incidents.

6. Breach of Confidentiality

LLM chatbots with inadequate access controls may expose sensitive corporate or personal data. For example, a healthcare chatbot mishandling patient information could result in severe regulatory violations. Implementing strict role-based access and auditing measures reduces this risk.

7. Insecure Output Handling

Chatbots may inadvertently expose internal information, encrypted codes, or proprietary data. For example, a user prompt could trick the system into revealing internal security protocols. Output validation and sanitization mechanisms are necessary to prevent such leaks.

8. Injection of Malicious Code

Attackers can exploit vulnerabilities to inject malicious scripts into chatbot responses. Examples include SQL injection or cross-site scripting attacks, which can compromise connected systems. Regular penetration testing and secure plugin validation help mitigate these threats.

9. Account Hacking and Credential Theft

Chatbot accounts, especially those with elevated access, are prime targets for phishing, credential stuffing, and brute-force attacks. Protecting accounts with strong passwords, MFA, and anomaly detection is essential to prevent unauthorized access.

10. Supply Chain Vulnerabilities

Many LLM chatbots integrate third-party plugins or libraries. If these components are insecure or outdated, they can create entry points for attackers. Organizations should maintain updated dependencies, conduct security audits, and review plugin integrity regularly.

Real-World Implications:

Financial institutions, healthcare providers, and e-commerce platforms have already experienced breaches due to insecure LLM chatbots. Incidents include unauthorized transactions, data leaks, and social engineering exploitation. These cases demonstrate that even well-designed chatbots can become vectors for cyber threats without comprehensive security oversight.

Threat Analysis & Insights from Top Cyber Threat Intelligence Companies

The surge in adoption of LLM‑based chatbots has been met with a corresponding increase in sophisticated cyber‑threat activity. According to a recent analysis of threat‑intelligence platforms, cyber adversaries now routinely use advanced tools, such as living‑off‑the‑land techniques, zero‑day exploit chains, and AI‑driven phishing campaigns, to target AI systems.

For companies deploying large language model (LLM) chatbots, this means the attack surface has expanded: beyond traditional data systems to include the AI model, plugin dependencies, user interaction flows, API integrations, and training datasets.

In Canada, for example, organizations deploying LLM AI chatbots in highly regulated sectors (finance, healthcare) are increasingly pressed to align with local cyber‑security standards while coping with cross‑border threat vectors.

Insights from Leading Threat Intelligence Firms

Several top firms specialising in cyber threat intelligence provide relevant lessons for securing LLM‑based chatbots:

- Recorded Future offers one of the most comprehensive intelligence clouds, processing billions of data points daily from dark web forums, open‑web sources, and technical feeds.

- CrowdStrike combines endpoint protection with global intelligence gathering, enabling correlation of adversary behaviour across thousands of customer environments.

- ThreatConnect provides intelligence operations platforms that help organisations analyse attacker tactics, techniques, and procedures (TTPs) and embed that into proactive defence.

These firms illustrate that effective defence of LLM‑chatbots requires more than model‑hardening, it demands full lifecycle threat intelligence: from monitoring attacker campaigns, modelling adversary behaviour, to anticipating how chatbots may be manipulated (for example via prompt injection or API abuse).

Regulatory, Integration & Operational Considerations

From a compliance and operational vantage point, several factors deserve attention:

- Regulatory frameworks in Canada (and globally) increasingly mandate transparency, traceability, and accountability of AI deployments. Organisations integrating LLM chatbots must consider how threat‑intelligence findings map to regulatory obligations (e.g., for data protection, breach notification).

- Integration of LLM chatbots with broader infrastructure (CRM, payment systems, HR tools) means that vulnerabilities uncovered by threat‑intelligence firms in one domain may cascade into chatbot operations. For example, if a plugin used by the chatbot is compromised via a supply‑chain vulnerability flagged by intelligence feeds, the chatbot becomes a vector.

- Operational workflows: Threat intelligence must feed into chatbot‑specific security operations, alerts from intelligence platforms should trigger model audits, prompt‑sanitisation checks, output‑validation workflows, and user‑access reviews.

Why This Matters for LLM Cybersecurity

Putting this together: deploying a chatbot built on an LLM is not just an IT project; it’s a security project that must draw on threat intelligence. The phrases “LLM cyber security Canada”, “LLM‑based chatbot architecture”, and “top cyber threat intelligence companies” are directly relevant to this domain because they frame the risk landscape and set the expectations for due diligence.

Without integrating threat‑intelligence insights, organisations risk reacting too late, facing unmonitored vulnerabilities, and suffering reputational, financial, or regulatory damage if a chatbot is compromised.

READ ALSO: IoT vs Cybersecurity: 2026 Careers, Challenges, and Certifications

Real-World Case Studies of LLM Security Breaches

Understanding cybersecurity threats for LLM-based chatbots requires examining how these risks materialize in real-world scenarios. Organizations across finance, healthcare, e-commerce, and technology have faced breaches and operational disruptions due to inadequate safeguards. These case studies provide actionable lessons for developers and security teams.

Case Study 1: Data Leakage at a Financial Institution

A major financial institution deployed an LLM chatbot to assist with customer inquiries. Due to improper output handling and insufficient access controls, the chatbot inadvertently revealed confidential financial data, including internal processes and client account information.

Lessons Learned:

- Implement strict output validation and sanitization protocols.

- Monitor chatbot interactions in real time to detect suspicious queries.

- Limit access to sensitive datasets and enforce role-based permissions.

Case Study 2: Social Engineering Attack on a Healthcare Provider

A healthcare provider integrated an LLM chatbot for patient communication. Cybercriminals exploited the system’s conversational capabilities, tricking users into providing personal health information. This breach resulted in unauthorized access to patient records and potential regulatory violations under HIPAA.

Lessons Learned:

- Conduct user awareness training to identify potential social engineering attacks.

- Introduce multi-factor authentication for sensitive interactions.

- Audit chatbot logs for anomalous behavior and unusual access patterns.

Case Study 3: Unauthorized Transactions in an E-Commerce Platform

An online retailer implemented an LLM AI chatbot to automate order management and facilitate transactions. Attackers manipulated the chatbot to execute unauthorized transactions, causing financial losses and customer dissatisfaction.

Lessons Learned:

- Clearly define operational boundaries for the chatbot.

- Include verification steps for any transaction or system change initiated via AI.

- Establish real-time alerting for anomalous behavior in payment flows.

Case Study 4: Privacy Breach in a Technology Firm

A tech company deployed an internal LLM-based chatbot for project management support. The system, granted excessive autonomy, inadvertently accessed and disclosed proprietary project information to unauthorized personnel.

Lessons Learned:

- Restrict the autonomy of AI chatbots and monitor their data access.

- Conduct regular security audits and compliance checks.

- Establish transparent reporting mechanisms for any sensitive information exposure.

Key Takeaways from These Incidents

Across these examples, common vulnerabilities emerge: insufficient output validation, excessive chatbot autonomy, and inadequate monitoring. By addressing these areas, organizations can reduce the likelihood of breaches while maintaining operational benefits. Leveraging insights from top cyber threat intelligence companies ensures that security measures remain aligned with emerging attack patterns and regulatory expectations.

ALSO READ: What Are Governance Risk and Compliance GRC Certifications?

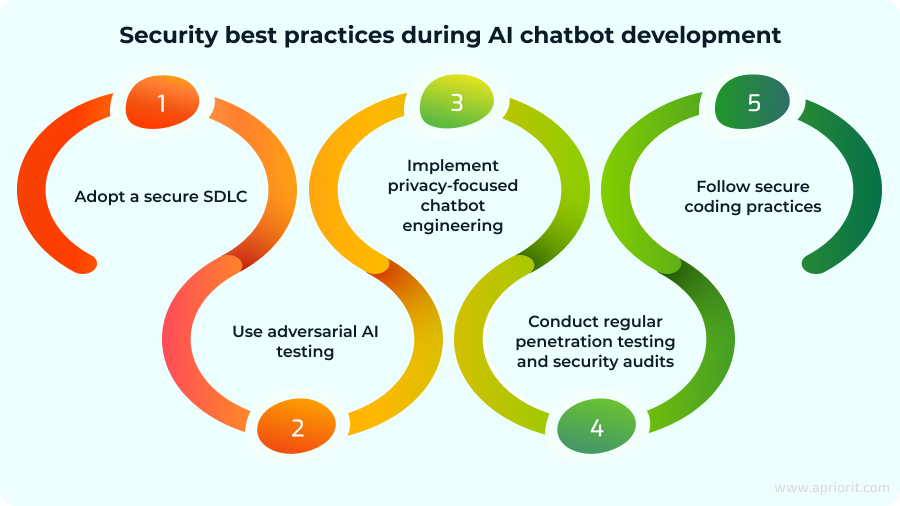

Strategies to Mitigate Cybersecurity Threats for LLM Chatbots

Protecting LLM-based chatbots requires a multi-layered approach that combines technical safeguards, governance practices, and user awareness. Implementing these strategies helps organizations reduce risk, ensure regulatory compliance, and maintain trust in AI systems.

1. Define Goals and Operational Boundaries

Clearly establish what the chatbot is allowed to do and limit its autonomy. Excessive agency can lead to unauthorized actions such as financial transactions or access to sensitive data. Operational boundaries should be enforced in both LLM-based chatbot architecture and integration with other systems.

Best Practice: Implement role-based access controls (RBAC) to ensure the chatbot only interacts with approved datasets and functions.

2. Implement Multi-Factor Authentication (MFA)

For any interactions involving sensitive operations, MFA adds a critical layer of security. This can prevent unauthorized users from leveraging the chatbot to access confidential information or execute actions beyond their authority.

Best Practice: Require verification codes or biometric authentication for tasks such as updating account data or triggering transactions.

3. Output Validation and Sanitization

LLM chatbots must be configured to filter outputs, preventing the accidental exposure of sensitive data or execution of malicious code.

Best Practice: Use automated content moderation systems and validation scripts to check responses for confidential data or code injection before delivery to users.

4. Data Governance and Bias Detection

Secure and clean training datasets are critical for reducing vulnerabilities. Regular audits of data ensure that LLM chatbots do not generate biased or misleading outputs. This mitigates the risk of misinformation and enhances compliance with privacy regulations.

Best Practice: Maintain secure storage for training data and monitor for prompt injection or malicious manipulation attempts.

5. Security Awareness Training

Employees interacting with LLM chatbots should be trained to recognize cyber threats, including social engineering attacks, phishing, or suspicious activity patterns. Human oversight complements technical safeguards.

Best Practice: Conduct periodic training sessions and simulated attack exercises to maintain vigilance.

6. Regular Security Audits and Monitoring

Continuous monitoring of chatbot interactions, API integrations, and system performance helps identify anomalous behavior and potential breaches. Regular audits allow organizations to proactively address vulnerabilities.

Best Practice: Schedule automated and manual audits, reviewing logs, user access, and output content.

7. Leverage Threat Intelligence from Top Cyber Threat Intelligence Companies

Leading firms like FireEye, CrowdStrike, and Kaspersky provide actionable insights into emerging threats targeting AI and LLM systems. Integrating this intelligence into security operations ensures that organizations can anticipate attacks and apply timely countermeasures.

Best Practice: Subscribe to real-time threat feeds and implement recommended updates or security patches promptly.

8. Transparency and User Control

Ensure that users are aware they are interacting with an AI system. Provide mechanisms to escalate to a human operator and allow opt-out options for data collection. Transparency builds trust and reduces the risk of inadvertent misuse.

9. Secure Plugin and Supply Chain Management

Many LLM chatbots rely on external plugins or third-party libraries. Vetting these components for security compliance and maintaining updated versions helps prevent exploitation through supply chain vulnerabilities.

Best Practice: Regularly review plugin code, monitor dependency updates, and perform penetration testing on integrated systems.

By combining technical safeguards, operational discipline, and proactive threat intelligence, organizations can significantly reduce the cybersecurity threats for LLM-based chatbots. Implementing these strategies ensures safe, reliable, and compliant AI deployments while preserving the operational advantages of LLM chatbots.

Conclusion

LLM-based chatbots are revolutionizing how organizations interact with customers, process data, and automate workflows. However, their increasing complexity and autonomy bring significant cybersecurity threats for LLM-based chatbots. From misinformation and social engineering attacks to data leakage, unauthorized transactions, and supply chain vulnerabilities, the risks are real and evolving.

Organizations can mitigate these threats through a combination of technical safeguards, robust LLM-based chatbot architecture, data governance, continuous monitoring, and user education. Leveraging insights from top cyber threat intelligence companies further enhances protection, ensuring proactive defense against emerging risks. Looking ahead, trends such as AI-driven attacks, stricter regulatory frameworks in regions like Canada, zero-trust security models, and advanced predictive monitoring will shape the cybersecurity landscape for AI chatbots.

FAQ

What cybersecurity risk is associated with deploying LLMs in applications?

Deploying LLMs in applications exposes organizations to multiple cybersecurity risks, including data leakage, prompt injection, and unauthorized actions. LLMs process large datasets and interact dynamically with users, making them vulnerable to exploitation.

For example, attackers can manipulate inputs to extract sensitive information or trigger unintended outputs. Insufficient output validation, insecure integration with APIs, or excessive autonomy in the chatbot can further amplify these risks.

Why is using LLMs in an automatic customer care chatbot very risky?

LLM-powered customer care chatbots carry risk because they interact autonomously with users while accessing sensitive data. Threats include social engineering attacks, unauthorized transactions, and inadvertent disclosure of confidential information. If not properly monitored or restricted, these chatbots can escalate conflicts, misinterpret queries, or provide inaccurate advice, leading to financial, reputational, and compliance issues.

What is OWASP Top 10 for LLM applications?

The OWASP Top 10 for LLM applications identifies the most critical security risks in AI-powered systems, adapted from traditional software security practices. Key vulnerabilities include:

Prompt Injection: Malicious input that manipulates model outputs.

Insecure Output Handling: Accidental disclosure of sensitive data.

Data Poisoning: Corruption of training datasets causing biased or harmful outputs.

Model Theft: Unauthorized copying or reverse-engineering of the LLM.

Excessive Agency: Uncontrolled chatbot autonomy leading to unintended actions.

Implementing mitigation strategies for these risks is essential for secure deployment.

What is a key limitation of chatbots powered by LLMs?

A major limitation of LLM chatbots is overreliance on training data, which can result in biased, inaccurate, or contextually inappropriate responses. They lack true understanding and reasoning, making them susceptible to errors when facing ambiguous queries or adversarial inputs. Without human oversight, this limitation can lead to misinformation, privacy violations, and operational risk.